Deliver AI notetakers your users can trust

Your users know the difference. Give them intelligence they can trust.

Build meeting intelligence that works consistently across real-world conversation scenarios.

Integrate speech recognition built specifically for meeting intelligence and conversation analysis.

Scale your meeting intelligence with speech recognition infrastructure designed for high-volume applications.

Learn why today’s most innovative companies choose us.

Modern AI notetakers need more than basic speech-to-text functionality.

Reliably detect multiple speakers and what they’re saying with the highest accuracy in the industry.

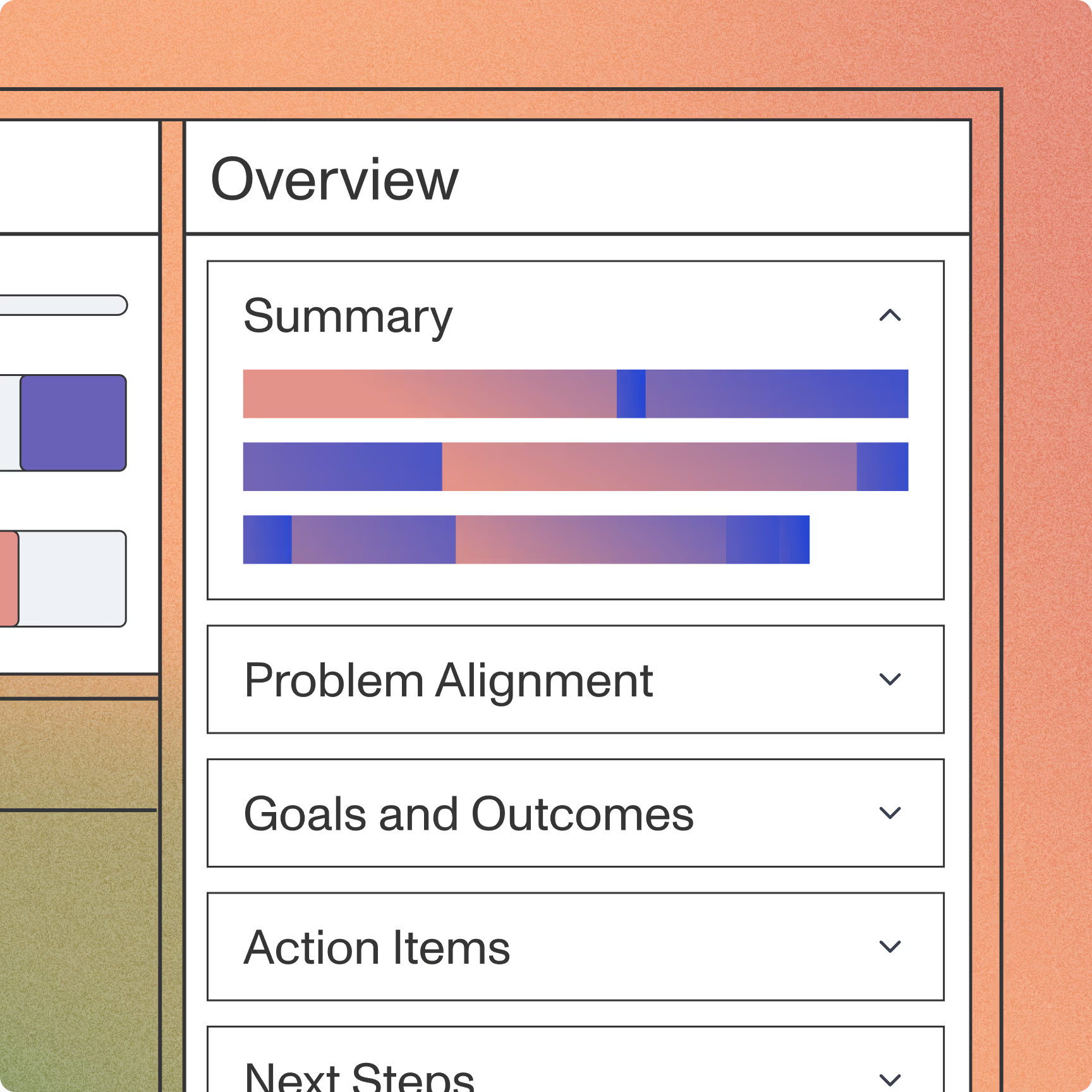

Turn hours of audio into concise, actionable insights with automatic summarization.

Capture speaker sentiment accurately for informed business decisions and problem solving.

Get granular timing data to sync conversation analysis and improve task automation.

Spot trends and areas of importance by identifying key conversation topics.

Safeguard sensitive information automatically to ensure privacy and compliance.

Deep dive into the latest insights, trends, and industry breakthroughs for all things conversation intelligence.

AssemblyAI supports meeting transcription with speaker diarization, real-time (sub‑second) and asynchronous STT, automatic summarization, word‑level timestamps and confidence scores, and optional PII redaction. Diarization results are returned in transcript.utterances for easy speaker‑segmented text.
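As a rough illustration of working with speaker-segmented output, the sketch below renders a list of utterances as a readable meeting transcript. It assumes each utterance is a dictionary with `speaker`, `text`, and `start` (in milliseconds) fields; those field names and the helper `format_utterances` are assumptions for this example, so check them against the actual response before relying on them.

```python
def format_utterances(utterances):
    """Render utterances as '[mm:ss] Speaker X: text' lines.

    Assumes each utterance is a dict with 'speaker', 'text', and 'start'
    (milliseconds from the beginning of the audio). These field names are
    an assumption for this sketch.
    """
    lines = []
    for utterance in utterances:
        total_seconds = utterance["start"] // 1000
        minutes, seconds = divmod(total_seconds, 60)
        lines.append(
            f"[{minutes:02d}:{seconds:02d}] "
            f"Speaker {utterance['speaker']}: {utterance['text']}"
        )
    return "\n".join(lines)


# Hand-written sample data, not real API output.
sample = [
    {"speaker": "A", "text": "Let's review the roadmap.", "start": 0},
    {"speaker": "B", "text": "Sounds good.", "start": 65400},
]
print(format_utterances(sample))
```

The same loop is a natural place to attach word-level timestamps or confidence scores if your notes view needs them.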

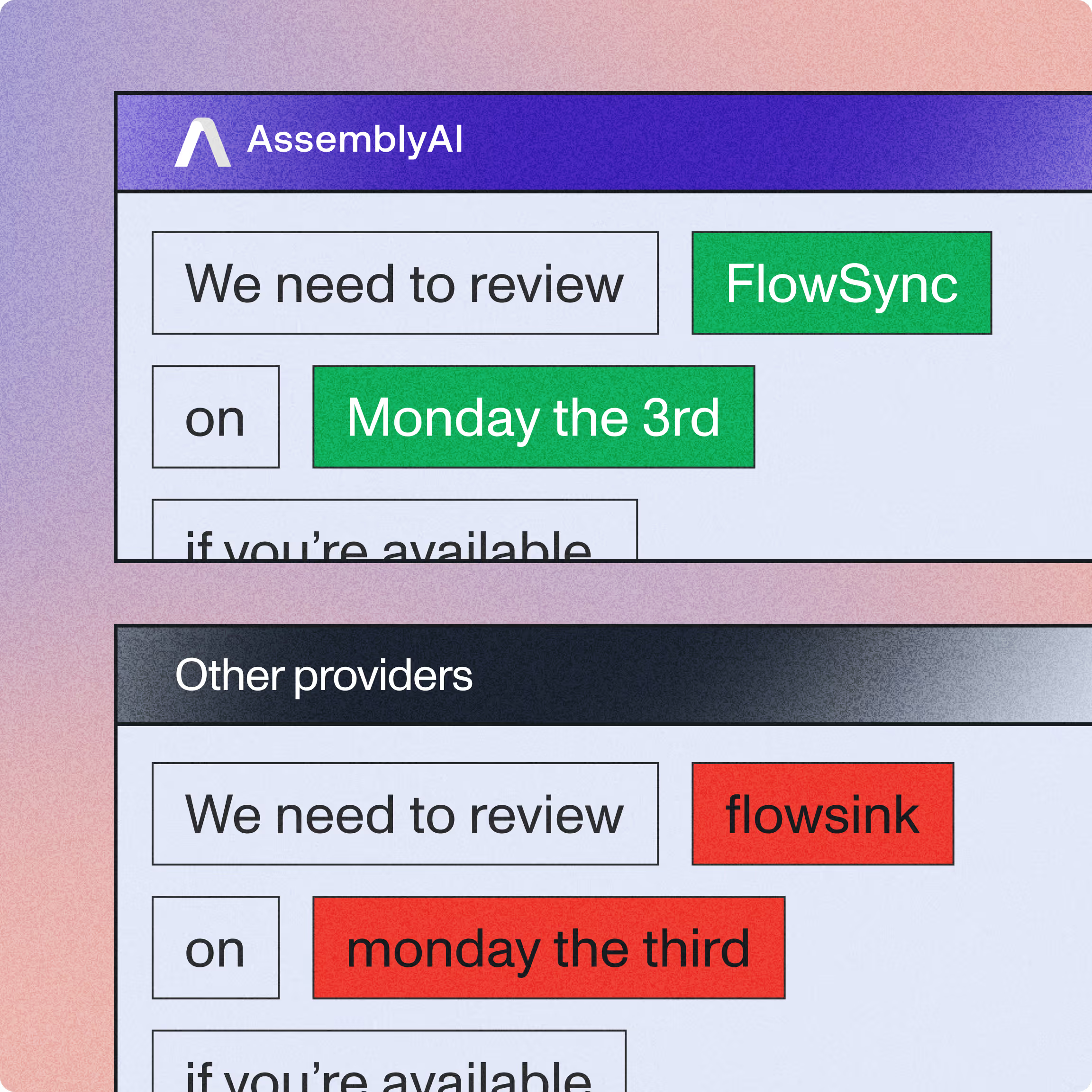

AssemblyAI reports industry‑leading meeting transcription accuracy: a 93.32% Word Accuracy Rate and 30% fewer transcription errors than alternatives. It also reduces diarization errors (64% fewer speaker counting mistakes), which helps reliably attribute who said what.

Yes. AssemblyAI’s Streaming Speech-to-Text returns results in a few hundred milliseconds, enabling sub-second latency. Its product pages cite ~300 ms “immutable transcripts” for voice agents and sub-second real-time performance for live meeting notetaker use cases.

Enable diarization by setting speaker_labels=true. AssemblyAI segments words into chunks, computes speaker embeddings, and clusters them to assign speaker turns across the file. Output labels are generic (Speaker A/B/C). Typically ~30 seconds of speech per person is needed; brief replies may be merged. Labels aren’t consistent across files by default.
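To make the clustering step concrete, here is a toy sketch of the general idea: each chunk of speech gets an embedding vector, and chunks whose embeddings are similar are assigned the same generic label. This is not AssemblyAI's implementation. The greedy centroid approach, the `assign_speakers` name, the `threshold` value, and the two-dimensional vectors are all assumptions chosen for illustration.

```python
import math
from string import ascii_uppercase


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def assign_speakers(embeddings, threshold=0.8):
    """Greedily cluster chunk embeddings and return generic labels (A, B, C...).

    Toy illustration only: a chunk joins the most similar existing cluster
    if the similarity reaches `threshold`; otherwise it starts a new cluster.
    Supports at most 26 speakers because labels are single letters.
    """
    clusters = []  # each entry is (sum_vector, member_count)
    labels = []
    for emb in embeddings:
        best_index, best_sim = None, threshold
        for i, (total, count) in enumerate(clusters):
            sim = cosine(emb, [t / count for t in total])
            if sim >= best_sim:
                best_index, best_sim = i, sim
        if best_index is None:
            clusters.append((list(emb), 1))
            best_index = len(clusters) - 1
        else:
            total, count = clusters[best_index]
            clusters[best_index] = ([t + e for t, e in zip(total, emb)], count + 1)
        labels.append(ascii_uppercase[best_index])
    return labels


# Made-up 2-D embeddings: two voices pointing in different directions.
chunks = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.95], [1.0, 0.05]]
print(assign_speakers(chunks))
```

The sketch also hints at why very short replies can be merged into another speaker: with little audio, an embedding is noisy and may land closer to someone else's cluster than to a new one.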

Create an account and get your API key. For recorded meetings, use an SDK to call client.transcripts.transcribe({audio: file/URL}); SDKs poll for completion or use webhooks. For live meetings, upgrade your account and use StreamingClient to connect and stream audio for real-time transcription.
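For teams not using an SDK, the recorded-meeting flow can be sketched against the REST API with only the Python standard library. This is a minimal sketch under assumptions: the base URL, the `audio_url` and `speaker_labels` request fields, the `authorization` header, and the `completed`/`error` status values reflect the public API as commonly documented, and should be verified against the current reference. The function names are hypothetical.

```python
import json
import time
import urllib.request

API_BASE = "https://api.assemblyai.com/v2"  # assumed base URL; verify against current docs


def build_transcript_request(api_key, audio_url, speaker_labels=True):
    """Build (but do not send) the POST request that submits a recording."""
    payload = {"audio_url": audio_url, "speaker_labels": speaker_labels}
    return urllib.request.Request(
        f"{API_BASE}/transcript",
        data=json.dumps(payload).encode("utf-8"),
        headers={"authorization": api_key, "content-type": "application/json"},
        method="POST",
    )


def transcribe_and_wait(api_key, audio_url, poll_seconds=3.0):
    """Submit a recording, then poll until the transcript completes or fails."""
    with urllib.request.urlopen(build_transcript_request(api_key, audio_url)) as resp:
        transcript_id = json.load(resp)["id"]
    status_request = urllib.request.Request(
        f"{API_BASE}/transcript/{transcript_id}",
        headers={"authorization": api_key},
    )
    while True:
        with urllib.request.urlopen(status_request) as resp:
            transcript = json.load(resp)
        if transcript["status"] == "completed":
            return transcript
        if transcript["status"] == "error":
            raise RuntimeError(transcript.get("error", "transcription failed"))
        time.sleep(poll_seconds)
```

Calling `transcribe_and_wait("YOUR_API_KEY", "https://example.com/meeting.mp3")` would block until the transcript is ready. In production, a webhook is usually a better fit than polling because it avoids holding a worker open for long recordings.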

Yes. AssemblyAI provides documented integrations with meeting ecosystems like Zoom RTMS and Recall.ai (for Zoom meeting bots), and supports LiveKit for voice agent use cases. For downstream workflows, no‑code options like Zapier and Power Automate let you pipe transcripts into 5,000+ apps.

Build what’s next on the platform powering thousands of the industry’s leading Voice AI apps.